Abstract

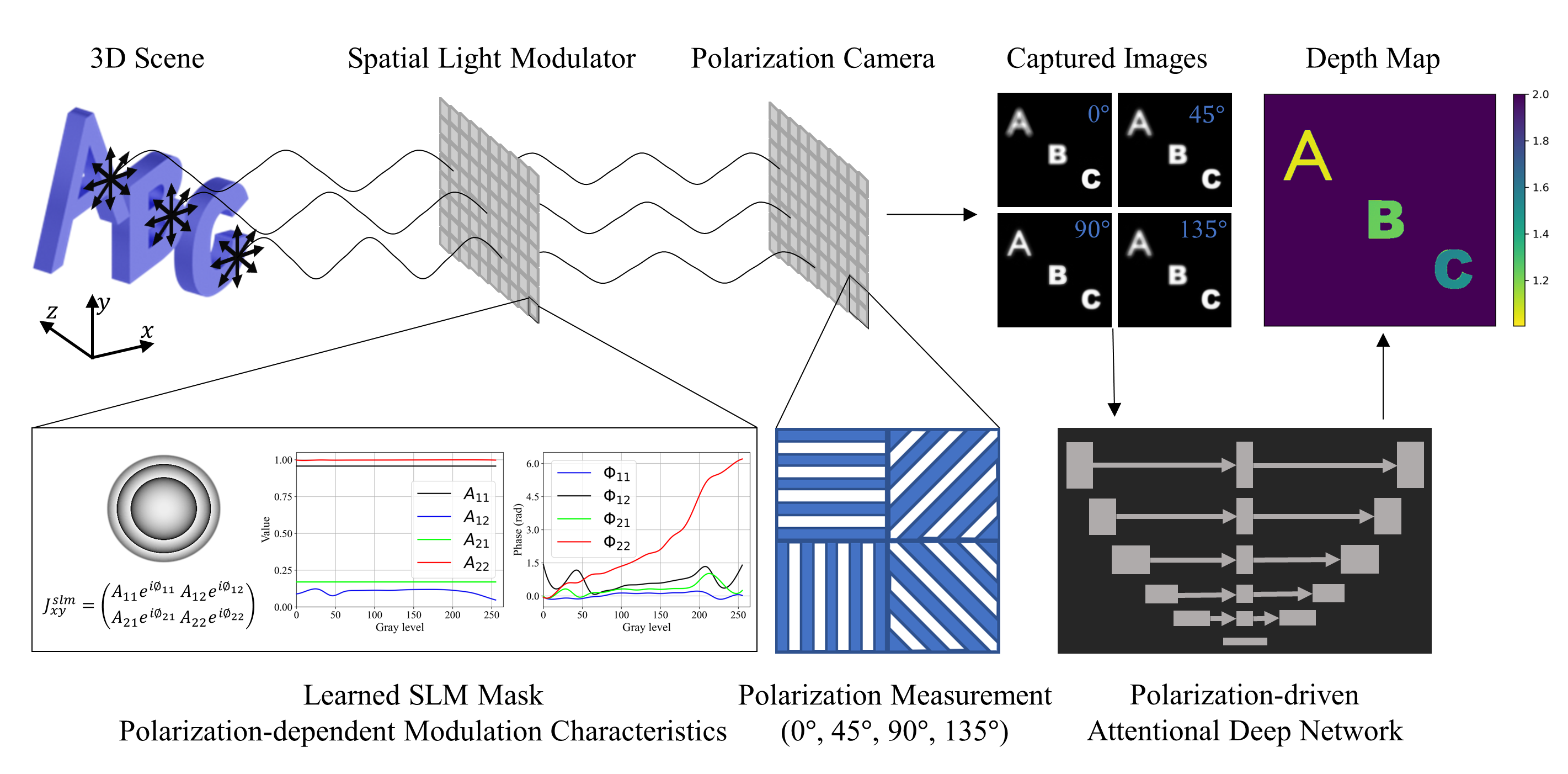

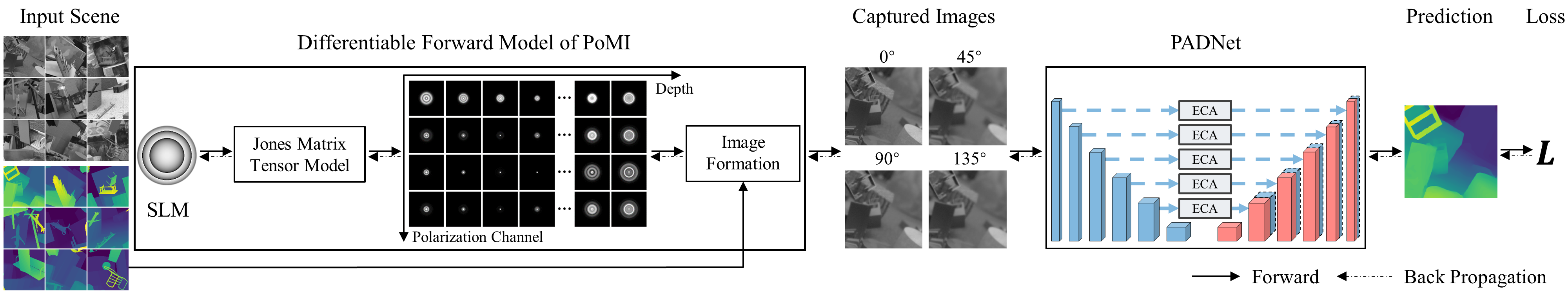

Estimating depth from a single defocused snapshot remains an ill-posed problem, because a defocused image carries only limited depth cues. This paper proposes a Polarization-multiplexed Modulation Imager (PoMI) that uses multiplexed polarization channels to capture more depth cues in a single snapshot. A polarization-dependent modulator, namely a Liquid Crystal Spatial Light Modulator (LC-SLM), encodes the depth information into the polarization channels. A differentiable polarization-dependent modulation camera model, combined with the Polarization-Driven Attention Network, enables joint optimization of the system through end-to-end training. Extensive tests on synthetic datasets verify the effectiveness of the proposed method. A system prototype is built to conduct real experiments, demonstrating the feasibility of the proposed method for natural scenes.

Method

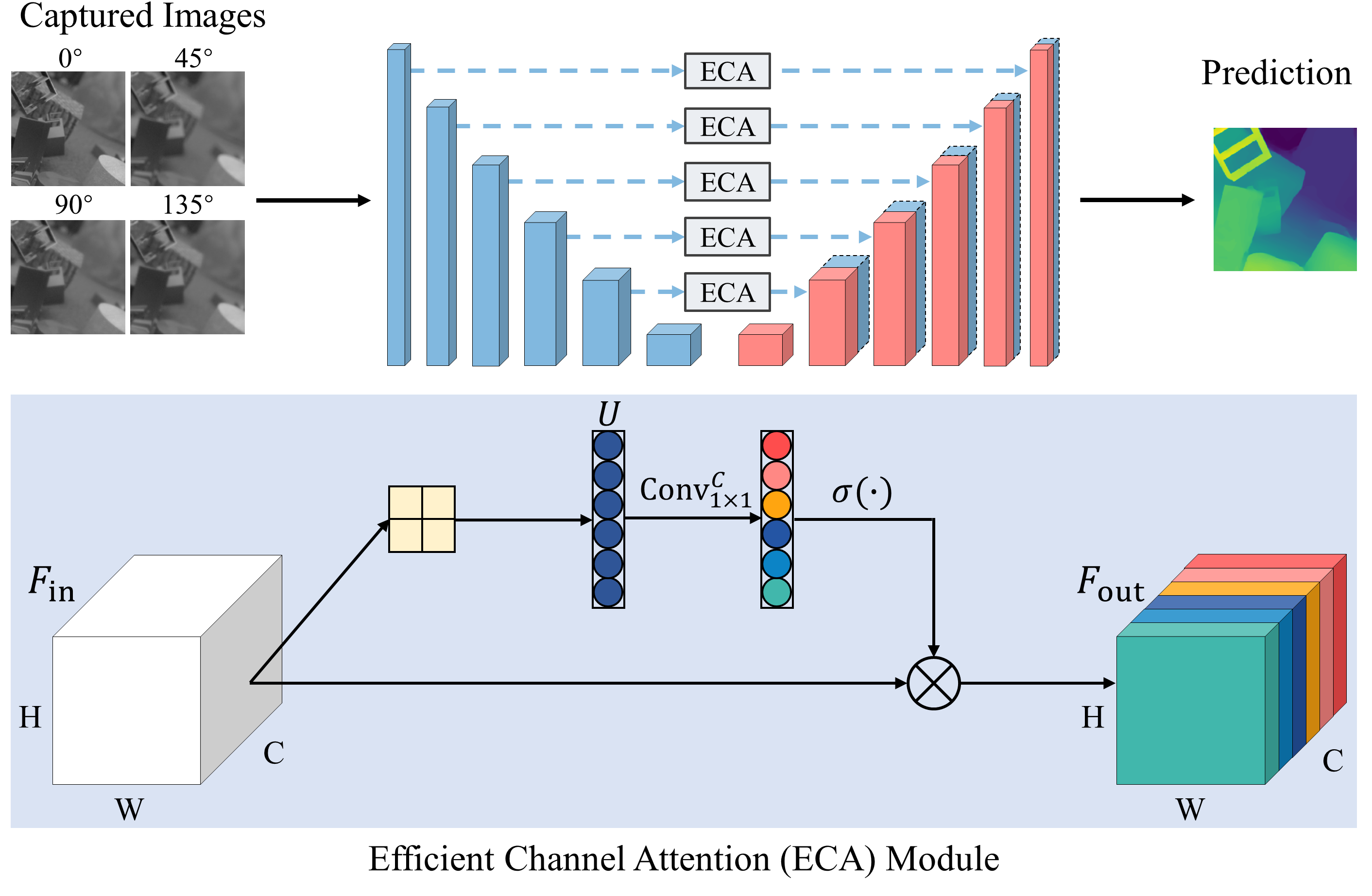

We propose a Polarization-multiplexed Modulation Imager (PoMI) system for depth imaging of natural scenes, which multiplexes the depth information into different polarization states with a single polarization-dependent modulator. A differentiable polarization-dependent image formation model is combined with an end-to-end optimization framework, enabling joint optimization of the modulation mask and the reconstruction neural network for depth estimation. To extract informative polarization features for depth estimation, a Polarization-driven Attentional Deep Network (PADNet) processes the coded polarization images. Simulation results on two datasets (FlyingThings3D, NYU Depth v2) show that our method outperforms state-of-the-art depth-from-defocus (DfD) approaches. Finally, we build a prototype imaging system and load the LC-SLM with the optimized modulation mask to conduct real experiments, demonstrating the effectiveness of the proposed method for natural scenes.
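To make the idea of polarization-multiplexed modulation more concrete, the following is a minimal NumPy sketch of how one polarization-dependent phase mask could yield a different depth-dependent point spread function (PSF) in each polarization channel. It is not the image formation model of the paper. It assumes a scalar Fourier-optics pupil model, a modulator that adds the phase mask to one linear polarization component only, mutually coherent components, and ideal analyzers at 0°, 45°, 90° and 135°. The names `pupil_grid`, `channel_psfs`, `defocus_waves` and the example mask are all hypothetical.

```python
import numpy as np

def pupil_grid(n=64):
    # Normalized pupil coordinates in [-1, 1]; the aperture is the unit disk.
    x = np.linspace(-1.0, 1.0, n)
    xx, yy = np.meshgrid(x, x)
    rho2 = xx**2 + yy**2
    return rho2, (rho2 <= 1.0).astype(float)

def channel_psfs(phase_mask, defocus_waves, angles_deg=(0, 45, 90, 135)):
    """Return one PSF per analyzer angle for a given defocus (in waves).

    Assumption: the modulator adds `phase_mask` (radians) to one linear
    polarization component and leaves the orthogonal component untouched.
    """
    n = phase_mask.shape[0]
    rho2, aperture = pupil_grid(n)
    defocus = 2.0 * np.pi * defocus_waves * rho2       # depth-dependent phase
    p_mod = aperture * np.exp(1j * (defocus + phase_mask))  # modulated component
    p_ref = aperture * np.exp(1j * defocus)                 # unmodulated component
    # Parseval: this scale makes a full-aperture, single-component PSF sum to 1.
    norm = (2 * n) ** 2 * aperture.sum()
    psfs = []
    for a in np.deg2rad(angles_deg):
        # An analyzer at angle a projects both components onto its axis.
        pupil = np.cos(a) * p_mod + np.sin(a) * p_ref
        field = np.fft.fftshift(np.fft.fft2(pupil, s=(2 * n, 2 * n)))
        psfs.append(np.abs(field) ** 2 / norm)
    return np.stack(psfs)

# Hypothetical example mask (radially symmetric, not an optimized mask).
rho2, _ = pupil_grid(32)
example_mask = 2.0 * rho2**2
example_psfs = channel_psfs(example_mask, defocus_waves=1.0)
```

Because every step is a composition of elementwise operations and an FFT, the same computation written in an automatic-differentiation framework would let gradients flow back to the mask values, which is the property an end-to-end optimization of the mask relies on.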

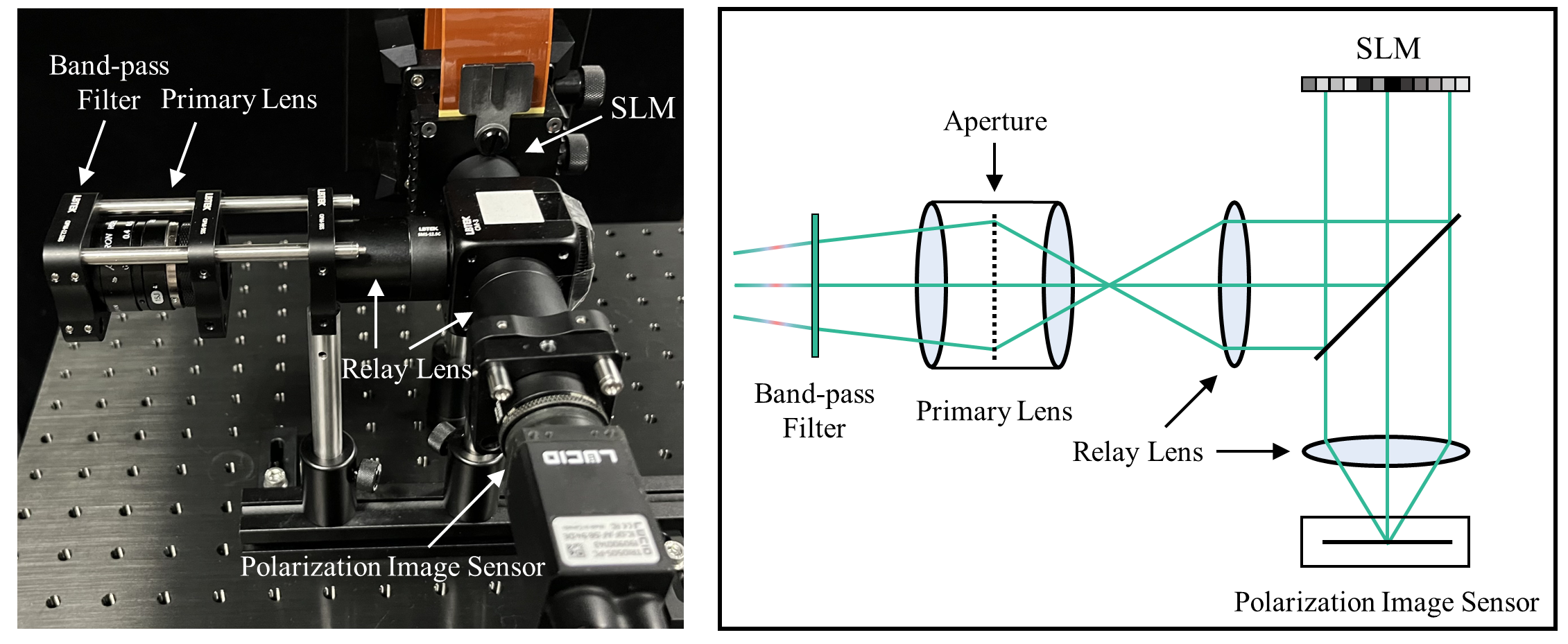

Figure 1. Schematics for the proposed Polarization-multiplexed Modulation Imager (PoMI) system.

Figure 2. Overview of the proposed end-to-end learnable PoMI system.

Figure 3. Polarization-driven Attentional Deep Network.

Experiments

Simulation Experiment

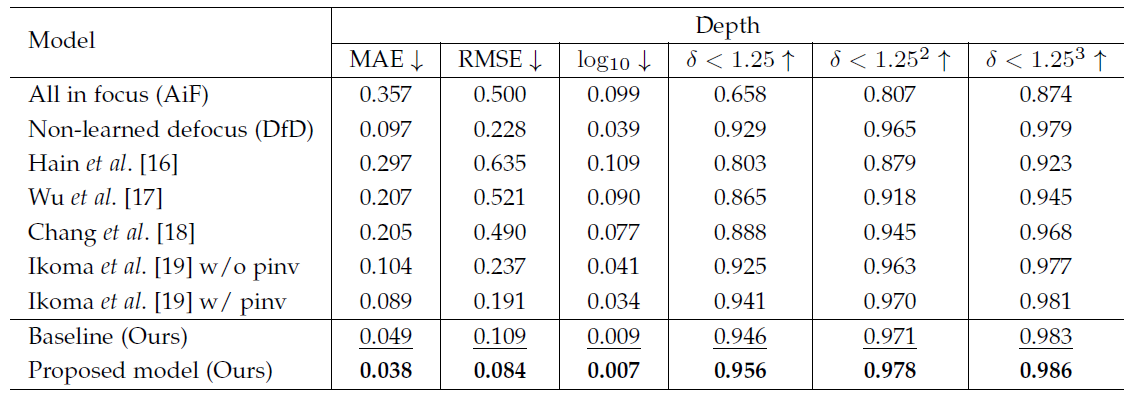

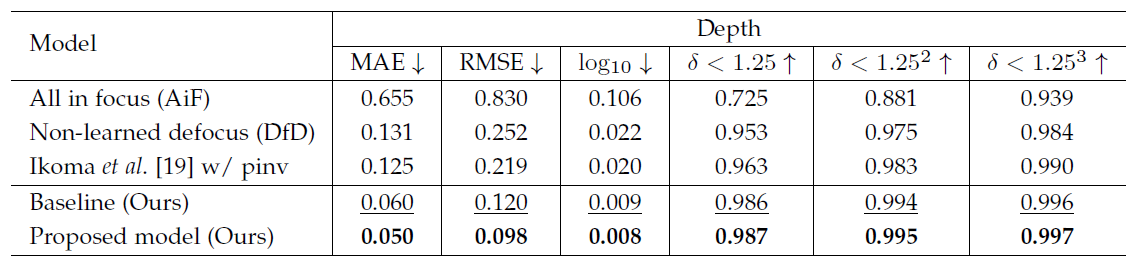

Table 1. Quantitative comparison of performances on the FlyingThings3D dataset.

Best results are in bold, second best are underlined.

Table 2. Quantitative comparison of performances on the NYU Depth v2 dataset.

Best results are in bold, second best are underlined.

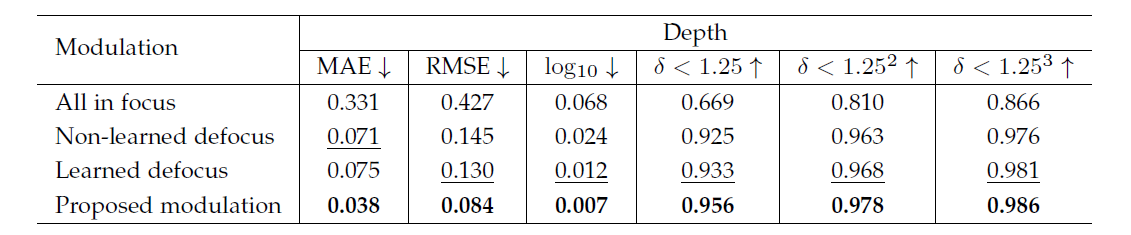

Table 3. Quantitative comparison of different modulations with PADNet on the FlyingThings3D dataset.

Best results are in bold, second best are underlined.

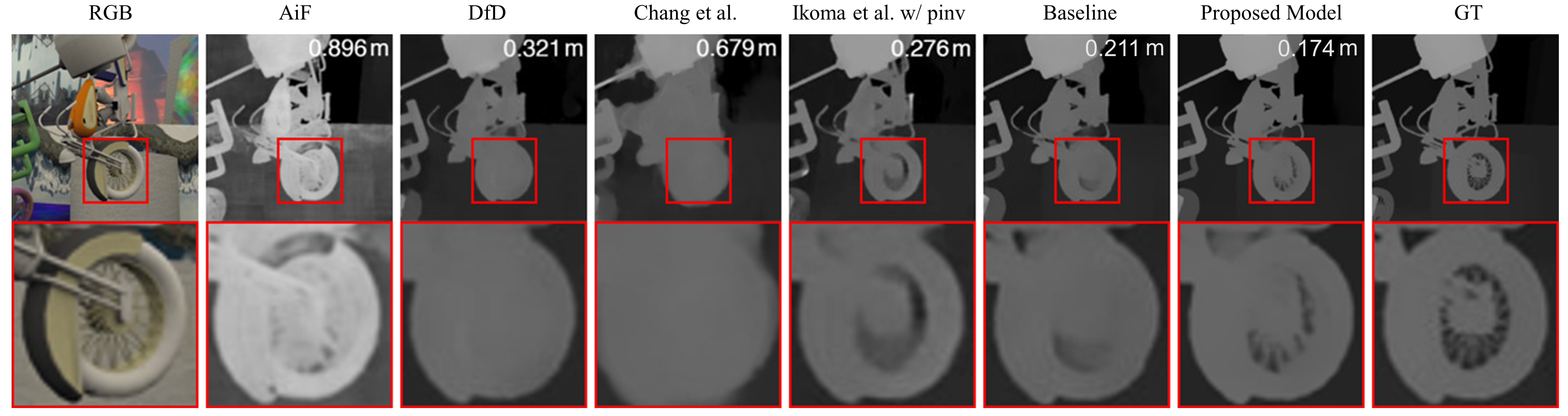

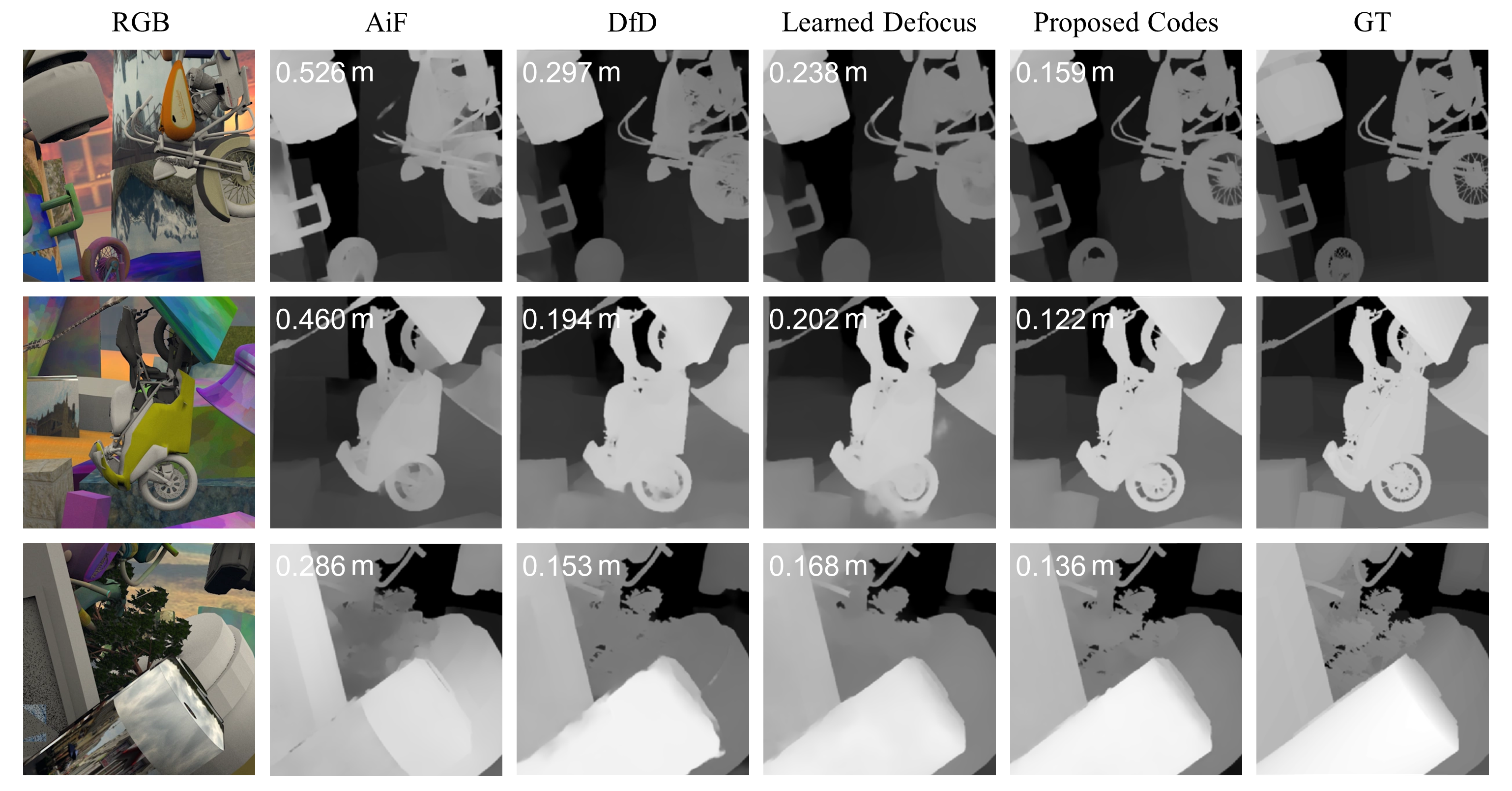

Figure 4. Qualitative comparison of our proposed method on the FlyingThings3D dataset against prior works.

The RMSE of each depth map is shown at the top right.
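For reference, the root-mean-square error (RMSE) between a predicted and a ground-truth depth map can be computed as in the sketch below. The function name `depth_rmse` and the optional `valid` mask are illustrative assumptions; the exact evaluation protocol behind the reported numbers (depth units, masking, clipping) is not specified here.

```python
import numpy as np

def depth_rmse(pred, gt, valid=None):
    """Root-mean-square error between two depth maps of the same shape."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    if valid is None:
        # Assumption: pixels without finite ground truth are ignored.
        valid = np.isfinite(gt)
    err = pred[valid] - gt[valid]
    return float(np.sqrt(np.mean(err**2)))
```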

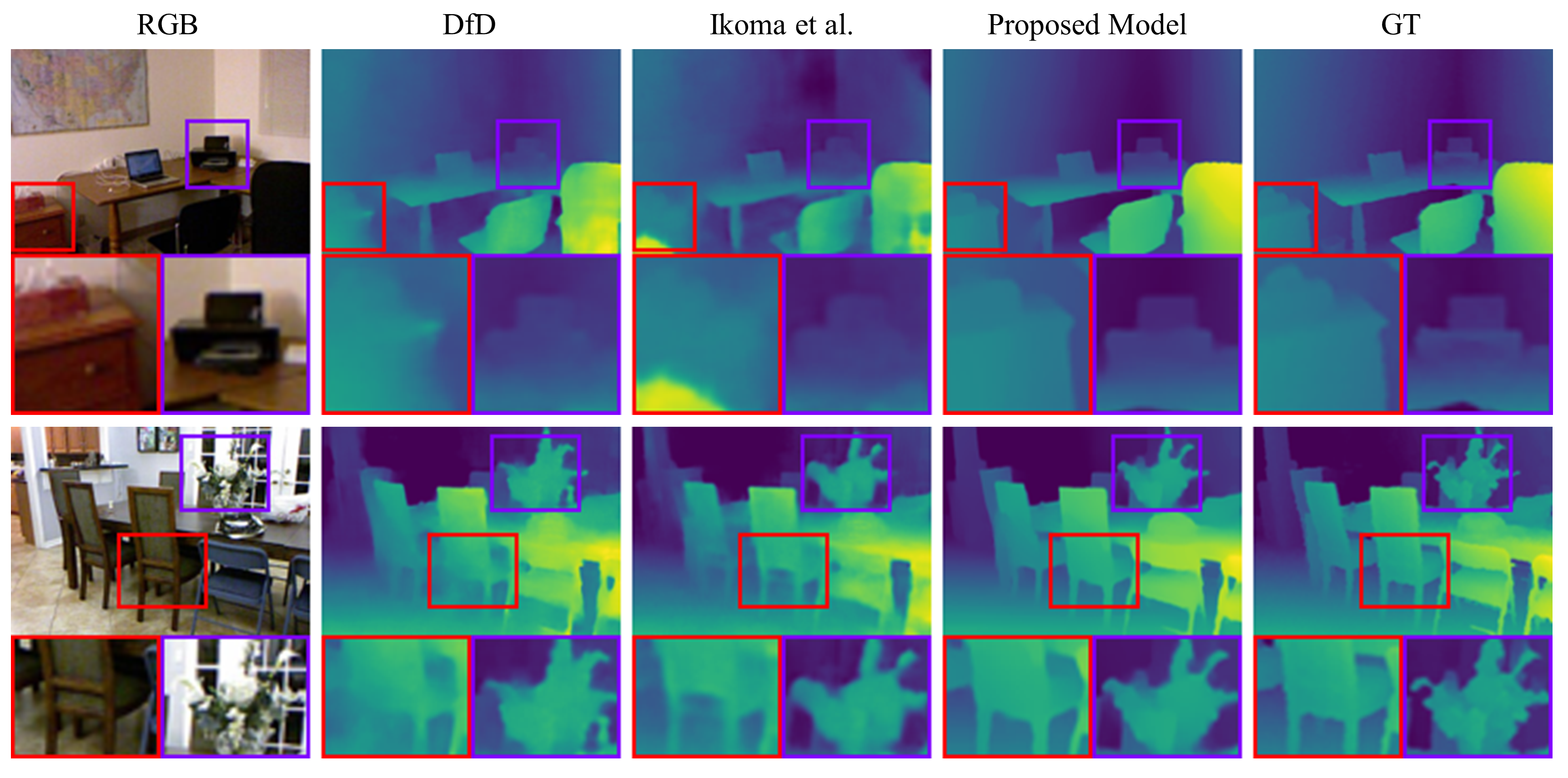

Figure 5. Qualitative comparison of our proposed method on the NYU Depth v2 dataset against prior works.

Figure 6. Qualitative comparison of our proposed modulation against other modulation schemes.

The RMSE of each depth map is shown at the top left.

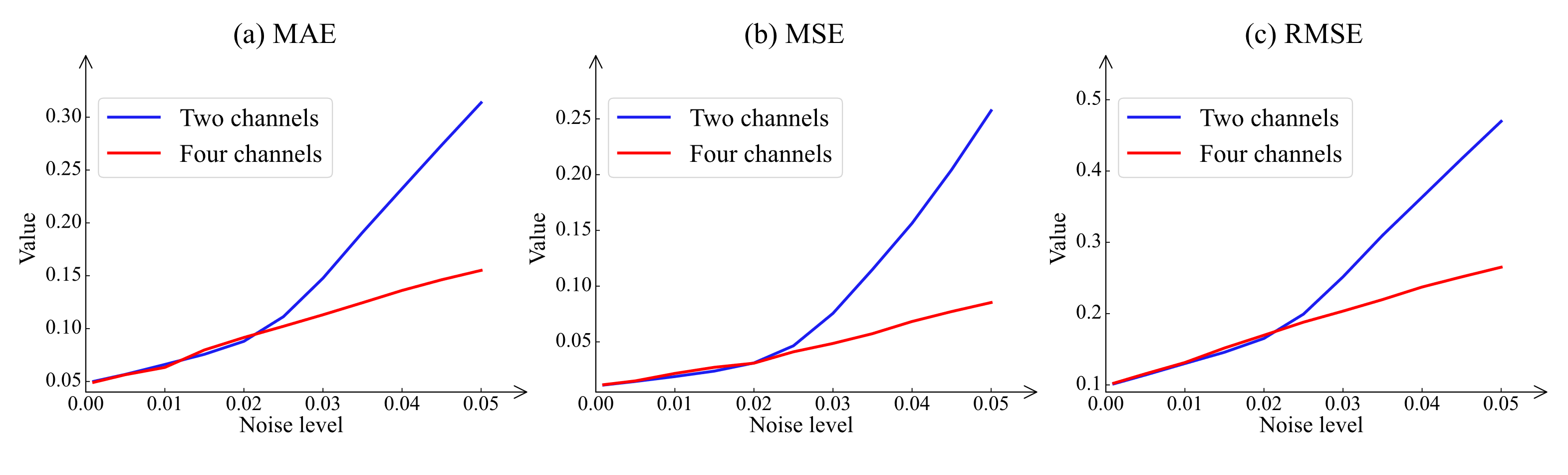

Figure 7. Quantitative comparison of the four-channel and two-channel systems at different noise levels.

Physical Experiment

Figure 8. The prototype and corresponding optical diagram of the proposed PoMI system.

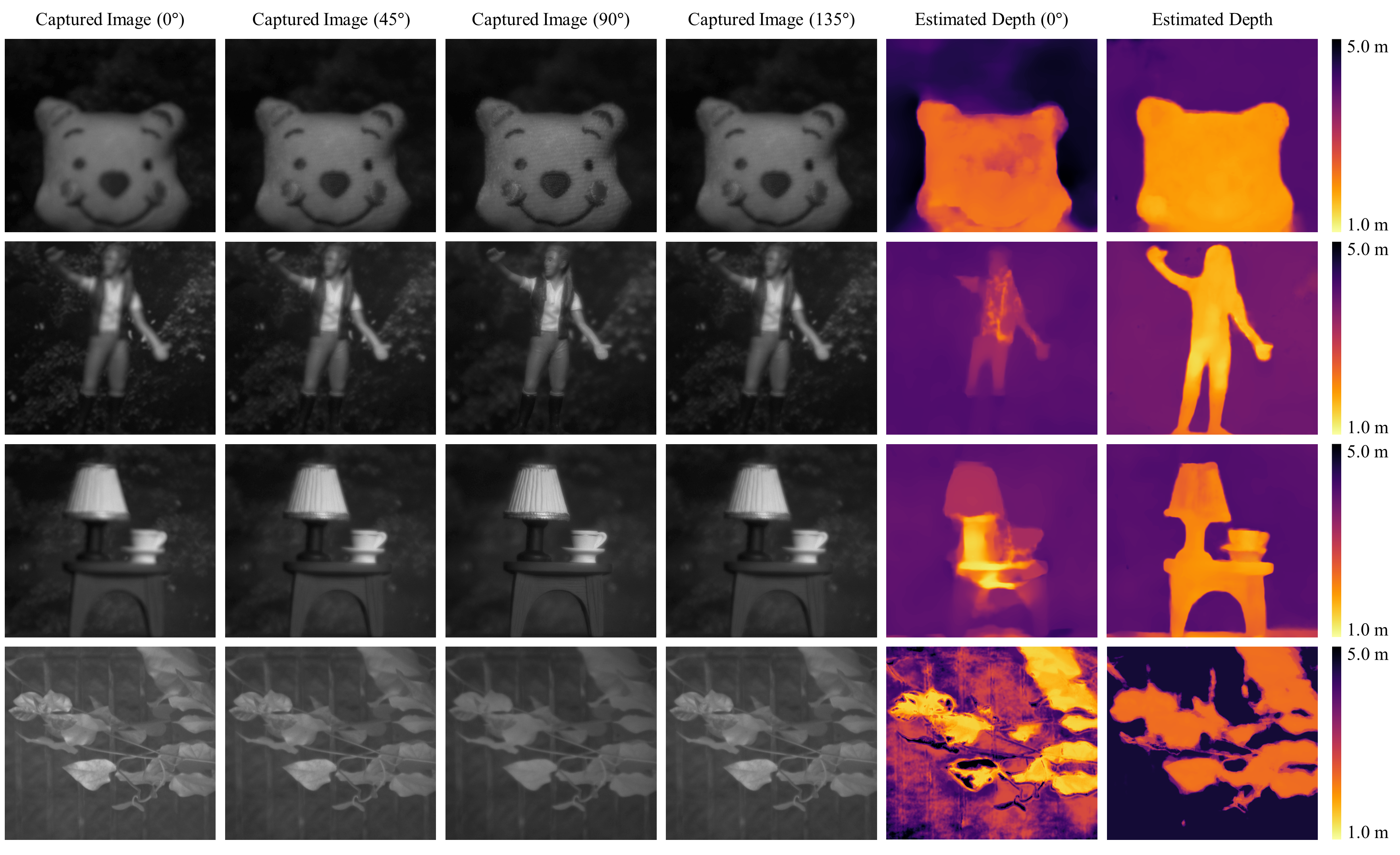

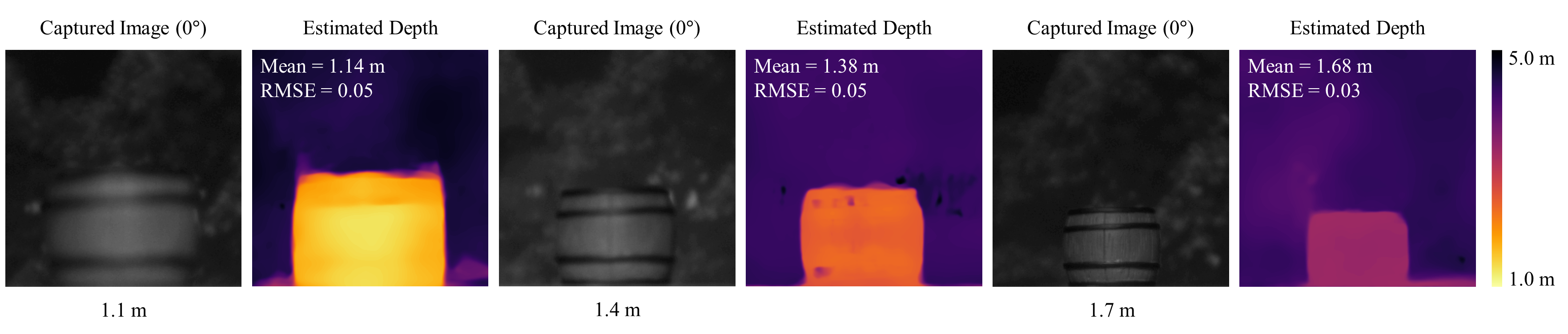

Figure 9. Experimental results on real capture data.

Figure 10. Quantitative analysis of physical experiments by our system prototype.

More Details